Sentiment toward generative AI is undergoing a course correction in the wake of Google's and Microsoft's missteps in implementing large language models (LLMs).

Publishers are now tackling the real-world implications of a tool that can spit out reams of copy in the blink of an eye, even for users with very little writing experience. Concerns are mounting over a flood of low-quality, AI-written stories swamping submission desks. Others, meanwhile, are asking serious questions about where AI gets the data it repurposes.

Editor’s Note: Monetization in the Age of AI

Updated On: December 1, 2025

By Vahe Arabian

Founder at SODP

Related Posts

-

How to Build Your Own Ad Network: A Step-by-Step Guide

-

A Story of How RollerAds’ Publisher Made $60,000

-

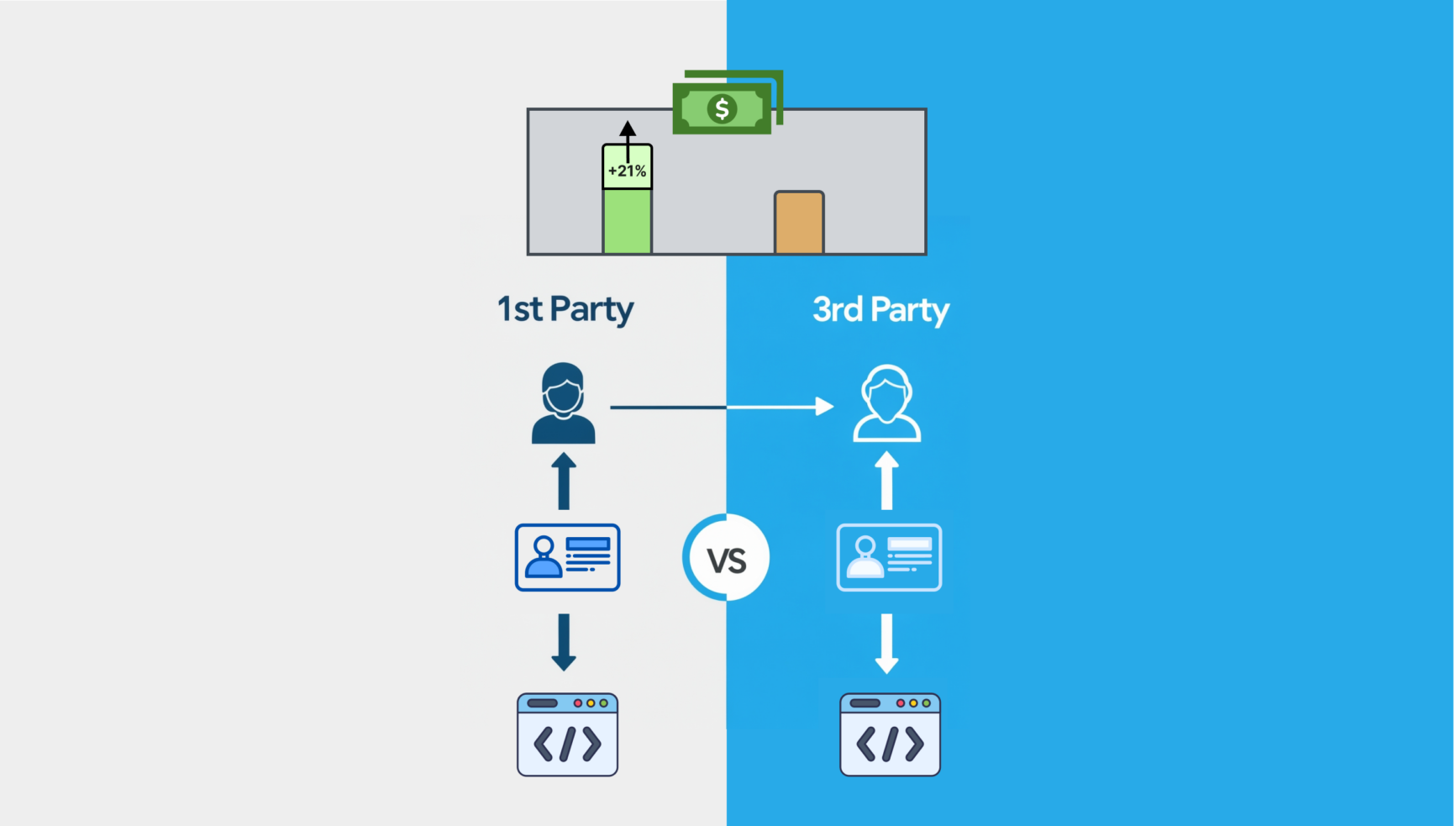

Publisher Used First-Party Data To Cash-In On 4th Quarter

-

The Web’s Largest List Owners Are Winning While Traditional Sites Fall Behind

-

Sites Seeing Higher CPMs Using Ad IDs & First-Party Data Solutions

-

Ethereum-Powered Publishing: Can Crypto Payments Redefine Author Royalties?

-

Building the Optimal Monetization Team

-

Foreign Affairs Magazine: Taking a Niche Product and Punching Above Its Weight

State of Digital Publishing is creating a new publication and community for digital media and publishing professionals working in new media and technology.