Over the past two months, publishers have been hit by four Google system and algorithm updates, most notably the Helpful Content Update (HCU) and a core algorithm update, resulting in traffic drops across the board.

While my industry colleagues have published their winner-and-loser analyses by query and niche, we’re still deep in the trenches, taking a stance similar to the one Mushfiq has shared: minimizing the impact for our clients and for SODP.

So, friends, here are three main tasks (plus a bonus) that you can work on right away and that should also shift your SEO paradigm and mindset for 2024.

1. Major task: Prune your content based on SERP/competitor-based traffic diagnosis

Don’t take the CNET approach of simply deleting every article that hasn’t generated traffic in a defined period. Instead, evaluate whether 1) content that has dropped off entirely is outdated and simply needs an update, 2) dead-weight content no longer reflects what your website is today, or 3) you need to remove content from your site even though it still drives some traffic.

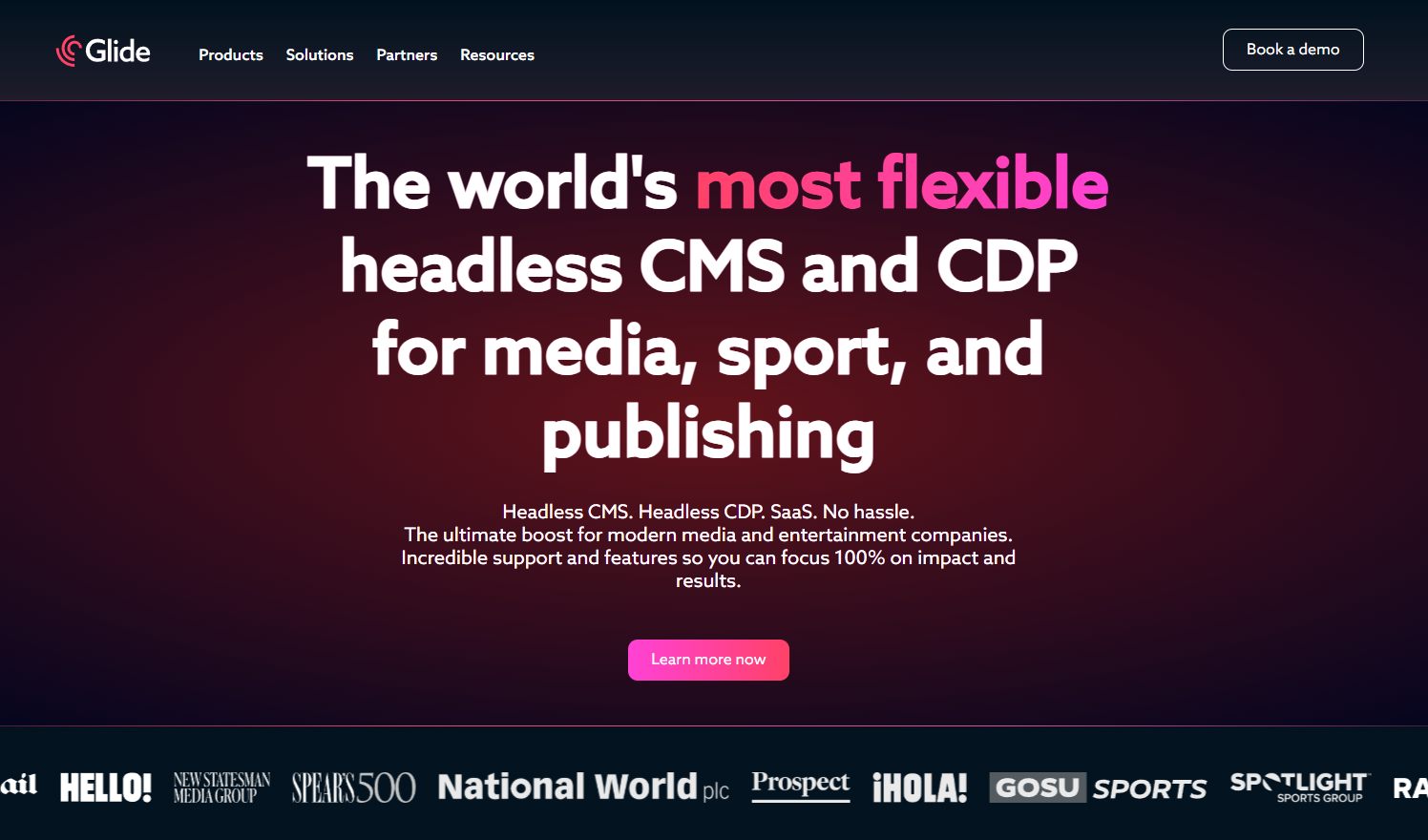

Here’s where Ahrefs’ Top pages report comes in. After filtering the results for traffic decline (as per the screenshot), if you see across your site and competitors’ sites that whole clusters are down more than 60-70% from that single update, question whether that content will be helpful going forward, as it could weigh down your other content and your Top Stories visibility sitewide.
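If you pull the Top pages data into a script, the cluster-level check is simple arithmetic. Below is a minimal Python sketch, assuming you have already tagged each URL with a topic cluster yourself and have traffic figures from before and after the update; the `PageTraffic` record, its fields and the 60% default threshold are illustrative assumptions, not Ahrefs’ export format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PageTraffic:
    # Hypothetical record shape: one row per URL, with organic traffic
    # estimates from before and after a single update.
    url: str
    cluster: str  # topic cluster you assign yourself, e.g. by folder or tag
    before: float
    after: float


def flag_declining_clusters(pages, threshold=0.6):
    """Return (cluster, drop) pairs whose combined traffic fell by >= threshold.

    drop is a fraction: 0.7 means the cluster lost 70% of its traffic.
    """
    totals = defaultdict(lambda: [0.0, 0.0])
    for page in pages:
        totals[page.cluster][0] += page.before
        totals[page.cluster][1] += page.after

    flagged = []
    for cluster, (before, after) in totals.items():
        if before <= 0:
            continue  # no baseline traffic, so a percentage drop is meaningless
        drop = 1 - after / before
        if drop >= threshold:
            flagged.append((cluster, round(drop, 2)))
    # Worst-hit clusters first
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    sample = [
        PageTraffic("/celebrity/a-bio/", "celebrity-bios", 1000, 200),
        PageTraffic("/celebrity/b-bio/", "celebrity-bios", 500, 100),
        PageTraffic("/guides/seo-basics/", "guides", 800, 700),
    ]
    print(flag_declining_clusters(sample))
```

Aggregating by cluster rather than by URL matches the advice above: a single page dropping can be noise, but a whole cluster dropping across your site and competitors’ sites is the stronger signal.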

This signal also suggests that sites across industries have overpublished this type of content for SEO/traffic gains. It has likely been flagged by quality raters, giving precedence to traditionally prominent sites. Celebrity bio articles are one example: they were published not only by entertainment sites but also by sports, finance and lifestyle publications, and now only people.com ranks for them.

If the traffic decline has persisted for several months, that points to a technical site issue or a need to improve the freshness and relevancy of your content.
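To separate a one-off hit from a slow slide when triaging pages, a rough heuristic over monthly traffic can help. This sketch assumes an oldest-to-newest list of monthly organic traffic per page or cluster; the function name, the three-month window and the 60% cut-off are hypothetical choices to tune, not an established rule.

```python
def classify_decline(monthly_traffic, window=3, sharp_drop=0.6):
    """Roughly label a monthly organic traffic series (oldest to newest).

    window: consecutive month-over-month declines that count as "sustained"
            (any size of decline counts; add a minimum if you need one).
    sharp_drop: fractional loss in the latest month that counts as a one-off hit.
    """
    if len(monthly_traffic) < 2:
        return "insufficient data"

    recent = monthly_traffic[-(window + 1):]
    if len(recent) == window + 1 and all(
        later < earlier for earlier, later in zip(recent, recent[1:])
    ):
        # Several months of decline: look at technical issues, freshness, relevancy
        return "sustained decline"

    previous, latest = monthly_traffic[-2], monthly_traffic[-1]
    if previous > 0 and 1 - latest / previous >= sharp_drop:
        # One big step down, e.g. coinciding with a single update
        return "sharp drop"

    return "stable or mixed"
```

For example, `[100, 90, 80, 70]` is labeled a sustained decline, while `[100, 110, 105, 30]` is labeled a sharp drop.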

2. Major task: Improve your site architecture

If you’ve kept changing or adding category/section pages, or tinkering with key internal linking structures like related and in-content article links, pagination, and menu and footer structures, then you’ve impacted your topical authority in one way or another. Put simply, changes to your taxonomy and to how related pages are grouped can dictate the weighting, and subsequently the ranking, of your articles.

On SODP’s site, while we rank reasonably well for long-tail terms, our cluster guide topics have room for improvement.

This year, we changed our related posts to use article titles as the selection criterion. However, we saw that newer articles started taking longer to surface in search, or dropped more quickly once they were buried deeper in paginated results. We also changed our menu and footer to accommodate new products/solutions and company pages to double down on E-E-A-T. Still, after a comparative analysis, we realized that the balance of our site architecture had diluted the emphasis on our most important posts.
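One way to see how pagination buries articles is to measure click depth, the minimum number of clicks needed to reach a page from the homepage. Below is a minimal breadth-first search sketch, assuming you already have a map of each URL to the internal URLs it links to (for example, built from an SEO crawler’s export); `click_depths` and the sample URLs are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return {url: minimum clicks from home} via breadth-first search.

    links: dict mapping each URL to the internal URLs it links to.
    URLs that cannot be reached from home are left out (potential orphans).
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Made-up link graph: one guide is linked from a fixed editor's-pick slot
    # on the homepage, while an older article is only reachable via pagination.
    site = {
        "/": ["/news/", "/guides/seo-checklist/"],
        "/news/": ["/news/page/2/"],
        "/news/page/2/": ["/news/page/3/"],
        "/news/page/3/": ["/news/older-article/"],
    }
    for url, depth in sorted(click_depths(site).items(), key=lambda kv: kv[1]):
        print(depth, url)
```

In this made-up graph, the guide in the fixed slot sits one click from the homepage, while the article reachable only through `/news/page/3/` sits four clicks deep.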

So leverage your site architecture by:

- Having fixed areas that link to your most important content as close to the root domain as possible, e.g., editor’s picks on category pages.

- Showing related articles based not only on recency but also on contextual relevancy, which also improves user satisfaction. We limited our related articles to the past two years, as most of those pieces have been updated/improved.

- If you have pages/tags/categories that haven’t been updated for a while, it’s time to consider consolidating them. We cut the number of our categories in half.

- Leveraging your menu to include important resources. We added some of our top tool categories to provide a more direct hierarchy, which wasn’t reflected on our category page alone since it was mixed with other tech-related content.

- Only showing essential company and policy pages where necessary and avoiding repetition in sitewide areas.
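The related-articles rule in the list above, recency plus contextual relevancy, can be sketched as a date filter followed by a similarity score. This assumes each article carries a publish (or last-updated) date and a set of tags, and uses Jaccard similarity of tags as a stand-in for relevancy; the `Article` shape, the 730-day approximation of two years and the scoring method are illustrative assumptions, not how SODP’s related posts are actually built.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Article:
    url: str
    published: date  # publish date, or last substantial update
    tags: set = field(default_factory=set)


def related_articles(current, candidates, today, max_age_days=730, limit=3):
    """Pick related articles that are both recent and topically similar.

    Recency: skip anything older than max_age_days (730 days ~ two years).
    Relevancy: Jaccard similarity of tag sets (shared tags / all tags).
    """
    cutoff = today - timedelta(days=max_age_days)
    scored = []
    for article in candidates:
        if article.url == current.url or article.published < cutoff:
            continue
        all_tags = current.tags | article.tags
        if not all_tags:
            continue
        score = len(current.tags & article.tags) / len(all_tags)
        if score > 0:
            scored.append((score, article.published, article.url))
    # Highest similarity first; among equal scores, the newer article wins
    scored.sort(reverse=True)
    return [url for _score, _published, url in scored[:limit]]
```

Filtering on date first keeps stale pieces out entirely, while the score decides the order among what remains; a title-only match, by contrast, has no notion of either.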

For SODP’s site, this was key to stabilizing our traffic drop within a few days, and we started to see immediate signs of recovery. It’s early days, but I look forward to sharing more updates in another editor’s note.

3. Major task: Conduct your technical housekeeping/hygiene

I’ve spoken about this repeatedly on our site, with both our community and our clients. Still, especially during core algorithm updates, you see evidence of a stronger correlation between traffic drops and accumulating technical issues. Google has an excellent article and video on debugging drops in Search traffic that explain the symptoms in more depth.

Besides SEO crawlers, take a deep dive into GSC’s Page indexing and Crawl stats reports, and note and address anomalies ASAP. If site functionality has been added or removed between core algorithm updates, manage how those URLs are crawled and indexed via your robots.txt file, meta robots tags or canonical tags, depending on the situation and severity.
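Before and after a robots.txt change, it is worth confirming which URLs the rules actually block. Below is a small sketch using Python’s standard-library `urllib.robotparser`; the rules and URLs are hypothetical. This parser only does simple prefix matching and does not implement Google’s `*` and `$` wildcard handling, so treat it as a sanity check rather than a replica of Googlebot. Also keep in mind that a URL blocked in robots.txt can’t be crawled, so Google won’t see a meta robots `noindex` on it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of an internal search feature and a
# retired tag archive that were added/removed between updates.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /tag/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from text, no network fetch
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    print(blocked_urls(ROBOTS_TXT, [
        "https://example.com/search/?q=seo",
        "https://example.com/guides/seo/",
        "https://example.com/tag/old-topic/",
    ]))
```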

One open secret publishers underestimate is the hygiene of internal and external links (links to resources/claims). If links aren’t kept up to date across the board and the proportion of 301s and 404s increases, the perceived quality of the site decreases, which also hurts user satisfaction. So, even if you aren’t updating older content, have processes and people in place to review and address internal and external link hygiene.
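To track whether the proportion of 301s and 404s is creeping up, you can summarize a crawler’s link export on each crawl and compare the numbers over time. The sketch below assumes rows shaped as `(source_url, target_url, status_code)` and treats link targets without a host (root-relative links) as internal; both the row shape and the `link_health` name are hypothetical, so map your own crawler’s columns onto them.

```python
from collections import Counter
from urllib.parse import urlparse


def link_health(links, site_host):
    """Share of redirected (3xx) and broken (4xx/5xx) links, internal vs external.

    links: iterable of (source_url, target_url, status_code) tuples.
    site_host: your own hostname, e.g. "example.com".
    """
    counts = {"internal": Counter(), "external": Counter()}
    for _source, target, status in links:
        host = urlparse(target).hostname  # None for root-relative links
        group = "internal" if host in (None, site_host) else "external"
        if 300 <= status < 400:
            bucket = "redirect"
        elif status >= 400:
            bucket = "broken"
        else:
            bucket = "ok"
        counts[group][bucket] += 1
        counts[group]["total"] += 1

    summary = {}
    for group, c in counts.items():
        total = c["total"]
        summary[group] = {
            "redirect_share": c["redirect"] / total if total else 0.0,
            "broken_share": c["broken"] / total if total else 0.0,
        }
    return summary
```

Running it after each scheduled crawl and comparing `redirect_share` and `broken_share` between runs gives the people reviewing link hygiene a simple trend line rather than a one-off list of errors.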

4. Major task: Improve your E-E-A-T with AI [Bonus]

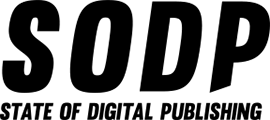

Longer-term shifts are happening as Search Generative Experience (SGE) reshapes search results pages and changes the nature of searches to favor first-hand experiences, irrespective of your site’s authority and other trust signals.

With Google updating its statement to soften its stance on generative AI, implying that it is acceptable to use as long as the content benefits users with their search needs, I’ve shifted my mindset toward experimenting with better ways to use generative AI to standardize how experience comes through in your content.

Jonathan Boshoff is someone I’m following closely at the moment; his article provides prompts and tools that go beyond ChatGPT to improve your content at scale across various experiences.

![Major task: Improve your E-E-A-T with AI [Bonus]](https://www.stateofdigitalpublishing.com/wp-content/uploads/2023/10/image-4.png)

He’s also created a Helpful Content Update tool and an E-E-A-T competitor comparison tool, which extend the use of the OpenAI API, with training on Google’s documentation, to provide interesting recommendations.

![Major task: Improve your E-E-A-T with AI [Bonus]](https://www.stateofdigitalpublishing.com/wp-content/uploads/2023/10/image-5.png)

Both are free but buggy, in that they don’t always work, but they can inspire you to create internal tools for the same applications. Design your own SEO tests and roll out changes with risk aversion.

While there are cases of lower-quality sites doing better with multiple ranking results, and publishers feel their sites are being treated and impacted unfairly at the moment, I hope some of these tasks give you a measure of control and a long-term view of how to thrive, shifting your approach toward providing better content and user experiences for your audience.