As the months roll by, it’s becoming ever clearer that generative AI is not only here to stay but will have a profound impact on the publishing industry in the long run.

While major publishers have been experimenting with AI for a number of years, it appears quite a few have now created dedicated teams to oversee AI initiatives within their companies.

This isn’t something that should alarm smaller publishers. There’s a reason prompt engineering roles are commanding such high salaries: making generative AI work within an editorial workflow is no mean feat and may take years to perfect.

The SODP team is experimenting with AI as well, albeit in an extremely limited capacity. We’re a long, long way from even considering using it as part of our article writing process, but we do see its potential for limited use cases.

I’m not going to delve into the inner workings of our AI project here, simply because it’s so far away from bearing fruit. I’ll save that for a case study at a later date.

For now, what I will say is that publishers stand to benefit from experimenting on small, low-risk tasks. These projects can provide valuable and relatively inexpensive insight into how generative AI functions and where its limitations lie.

But you don’t need to take my word for it alone. The director of the London School of Economics media think-tank Polis, Charlie Beckett, is also encouraging publishers to “start playing with [AI]”.

I can understand why experimenting with AI might be far down some publishers’ list of priorities. After all, there’s a great deal of noise over the challenges the technology poses, with copyright infringement and the spread of misinformation seemingly the main concerns of the day.

AI in the Crosshairs

Media tycoon Barry Diller has urged publishers to consider suing AI companies in order to prevent their content from being “stolen”.

“If all the world’s information is able to be sucked up in this maw and then essentially repackaged … there will be no publishing, it is not possible,” Diller reportedly said during the Semafor Media Summit, before adding: “Companies can absolutely sue under copyright law.”

His comments come around the same time that media intelligence company Toolkits released its Subscription Publishing Snapshot: Q2 2023 report, revealing that generative AI “could hinder subscription efforts”.

The analysis provider noted that publishers had been caught on the back foot following ChatGPT’s emergence. It added that the industry was increasingly concerned that the public would be able to use Google’s Bard and Microsoft’s Bing Chat to freely access paywalled content.

This is an issue previously flagged up by my colleague Mahendra Choudhary, who argued that bigger publishers would likely begin blocking AI crawler access to prevent such an infringement.

While content scraping is a legitimate issue, there already appear to be answers, such as blocking AI crawlers, that shouldn’t be particularly challenging to implement. After all, technological challenges should have technological solutions.

However, this isn’t the only concern doing the rounds.

Damage Control

Photoshopped images and deepfake videos have been a problem for years, but generative AI makes such fakes significantly easier and faster to produce.

Fake images created by AI have already begun bleeding over from social media into the mainstream, and the news media is increasingly worried about the consequences.

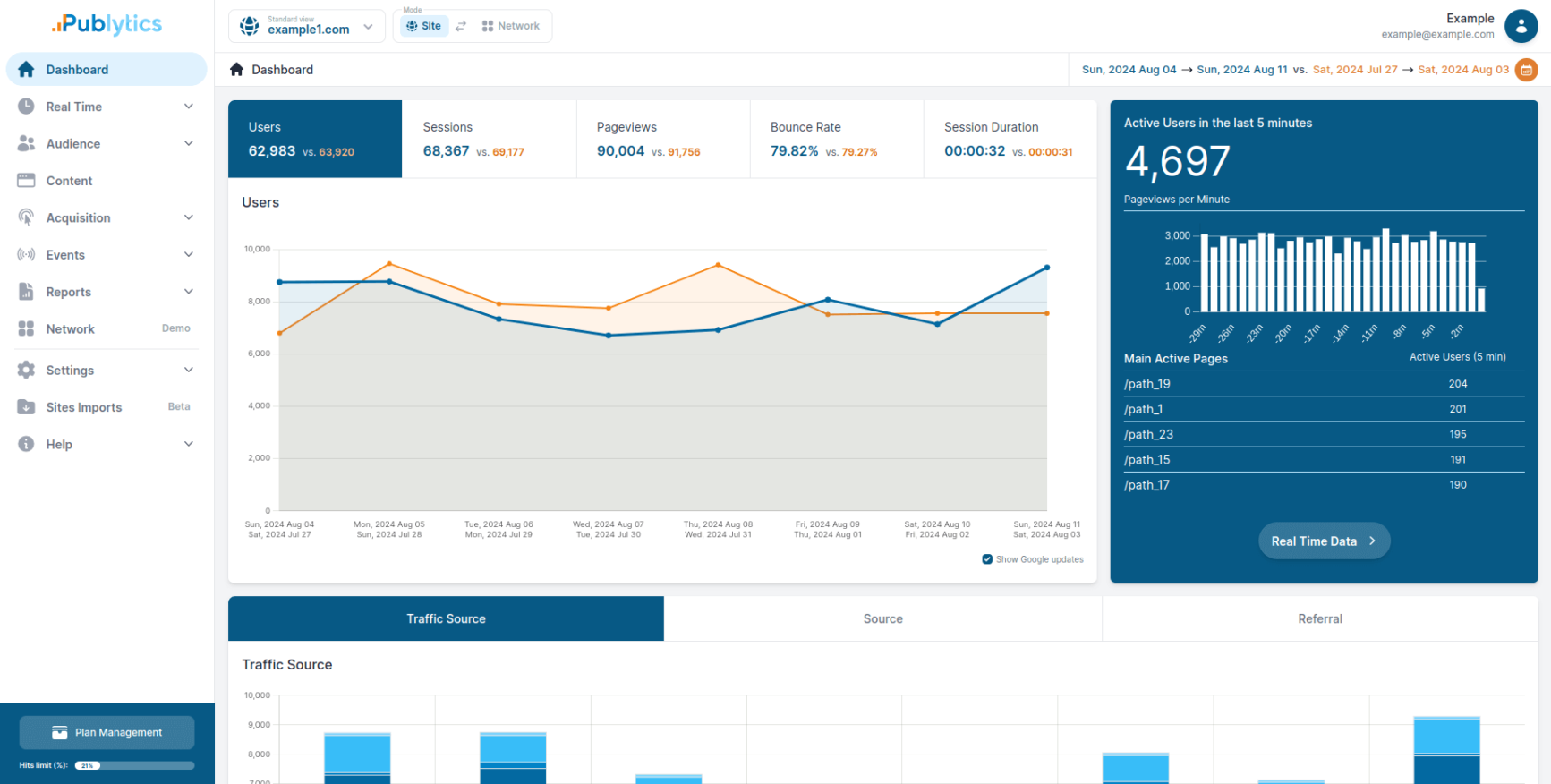


There will always be challenges whenever a new technology gains enough traction, and I expect there will be teething problems as the media and the general public adapt to the mainstreaming of AI.

With that said, misinformation is hardly a new phenomenon: in 2018, rumors spread via WhatsApp in India led to mob killings. Indeed, there’s an argument to be made that, between an increasingly skeptical public and publications’ desire to avoid being discredited, fraudulent imagery is less of a threat than some alarmists would have you believe.

I’m not naively optimistic about the future of generative AI, but nor do I believe that there’s cause for panic.

Generative AI is here, and publishers need to get to grips with it. But it’s not just media organizations that need to understand its importance; individuals also need to be mulling over its implications for their careers. Will future employers expect candidates to list generative AI in the skills section of their CV, much as more mundane software skills are listed now? Food for thought.