There was a time when speaking commands or questions to a machine and receiving an answer was something out of a science-fiction future. Well, for more than 110 million people in the US alone, that’s their everyday present. According to Gartner, 30% of searches will be conducted via voice in 2020.

Voice is the default interface for the more than 200 million smart speakers that Canalys predicts will exist by the end of 2019. But most smartphones today also come with some sort of digital assistant installed, which allows for a voice interface. Juniper Research estimates that by 2023 there will be 8 billion digital voice assistants in use. Most of them will be on smartphones, but smart TVs, wearables, smart speakers and other IoT devices will also see significant growth in voice-command usage.

The digital voice assistant market is dominated by four big players:

- Google Assistant. This digital assistant comes installed with all Android phones as part of the Google app. Of course, it uses Google search to answer your queries. Other Google properties, like YouTube or Google Maps, are also closely tied to the assistant. Companies can expand on the Assistant’s capabilities by developing Actions. These Actions can be used to interact with hardware (“Hey Google, turn off the lights in the living room”) or with any other online service (“Hey Google, give me today’s headlines for my favourite website”).

- Amazon Alexa. Amazon’s digital assistant is best known for powering Amazon’s Echo line of smart speakers. But in fact, it’s available on over 20,000 devices, including not just smart speakers and wearables, but also TVs and even cars. Alexa also allows the creation of voice apps through Alexa Skills. Amazon even supports premium subscriptions for Skills, so publishers can monetize their efforts by offering more personalization options or more detailed coverage. Alexa uses Bing as a search engine.

- Apple Siri. What started in 2011 as a feature of the iPhone 4S is now a full-fledged digital voice assistant embedded in all Apple products, including Apple TV, HomePod speakers and wearables. Apple offers SiriKit so that companies can extend their apps to support voice interaction through Siri. The default search engine Siri uses is Google, although it can be configured to use a different search engine, like DuckDuckGo or Bing.

- Microsoft Cortana. Microsoft’s digital assistant is available natively in Windows, and on Android and iOS devices as a stand-alone app. Cortana appears to have lagged behind other digital assistants in adoption, and Microsoft’s strategy seems to be to integrate Cortana with other digital assistants rather than compete with them. Microsoft also allows the development of third-party Skills for Cortana, but only for the US market for now. Given this strategy shift, it’s unclear whether Cortana Skills will become more widely available. Cortana uses Bing as a search engine.

Voice search is not just for mobile: it’s for our everyday life

The early stages of voice search were driven by the ever-expanding adoption of mobile phones. Google Now, the Assistant’s predecessor, was launched in 2012, and Siri launched even earlier, in 2011. This changed with the introduction of Alexa in 2014: the digital assistant now lived in a piece of hardware designed never to leave your home.

Digital assistants are now embedded in a wide range of devices, from phones to watches to TVs to cars, so people can interact with them in many more situations. But the way we interact with an assistant is, of course, radically different from the usual text interface of search engines.

How voice search is different from text-based search

- More complex and longer queries. Speech recognition has improved a lot over the past few years, and it now handles more complex queries than it used to. This encourages users to phrase their queries and commands in more natural language.

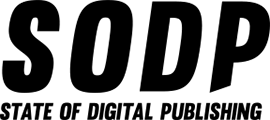

- More questions. According to research from seoClarity, more than 15% of voice searches start with how, what, where, when, why or who. This reflects the conversational nature of a voice interface compared to a text-based search engine.

- Short and concise answers. Even though the user may use longer queries to express what they want the assistant to do or look up, people expect short and clear responses.

- No visual information hierarchy. On the web, we rely on visual cues to organize content and highlight the most important points we want to convey. Content on a landing page should be rethought for voice-only interfaces: decide what to highlight and how to convey it without the visual cues a browser provides.

- Winner takes all. When using a search engine you can skim through the results, and even though you would rarely reach the second page of the SERP, you may well click on a link below the top three results. In a voice interface, people don’t get a results page from which to choose. The digital assistant returns just one result.

- Local search. Considering that between 30 and 40% of mobile searches are local queries, we can also expect a high percentage of queries in voice search to ask for local results.

Keyword research for voice search

The main difference in doing keyword research for voice instead of text is that you will use much more natural-language keywords. The way we speak is completely different from the way we type: we use natural phrases instead of a short string of keywords.

To discover which keywords to target, use a semantic keyword research tool. AnswerThePublic is the most commonly used tool for this. Enter your seed keyword and you will get a list of questions containing that keyword. The data is first presented as a graph, which is pretty but not very useful. Fortunately, you can download the data as a handy CSV.

You can also take note of the “People also ask” snippet in Google Search to uncover more keyword opportunities.

If you have a call center or a live chat or chatbot feature, mine the data from those conversations to find the most commonly asked questions.
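Once you have collected questions from these sources, a quick way to see which question types dominate is to group them by their leading word. The Python sketch below assumes a CSV export with a column named `Suggestion`; that column name is hypothetical, so check the header of your own file. It simply buckets phrases under how, what, where, when, why and who, with everything else under “other”.

```python
import csv
import io
import re
from collections import defaultdict

# The six question words from the seoClarity research cited earlier.
QUESTION_WORDS = {"how", "what", "where", "when", "why", "who"}


def group_by_question_word(phrases):
    """Group keyword phrases by their leading question word.

    Contractions such as "what's" are matched on the letters before the
    apostrophe; phrases without a leading question word go under "other".
    """
    groups = defaultdict(list)
    for phrase in phrases:
        phrase = phrase.strip()
        match = re.match(r"[a-z]+", phrase.lower())
        first = match.group(0) if match else ""
        key = first if first in QUESTION_WORDS else "other"
        groups[key].append(phrase)
    return dict(groups)


# Hypothetical export: the "Suggestion" column name is an assumption,
# so check the header row of your own file and adjust it.
sample_csv = """Suggestion
how to make sushi at home
what's the best sushi rice
sushi near me
where to buy sushi grade fish
"""

rows = csv.DictReader(io.StringIO(sample_csv))
groups = group_by_question_word(row["Suggestion"] for row in rows)
for word in sorted(groups):
    print(word, groups[word])
```

Sorting the groups by size instead of name would show at a glance which question type to prioritize.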

‘Near me’ queries

As local queries make up a big share of voice search, you can expect a lot of queries ending with the phrase “near me”, as in “what’s the best sushi near me” or “what’s the best gym near me”. How do you optimize for that? Local businesses should keep their data up to date in directories like Yelp, review sites like Tripadvisor and services like Kayak. For publishers, structured data is the answer.
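A minimal sketch of such markup for a local business, generated with Python’s standard `json` module. It uses the schema.org `Restaurant` type (a subtype of `LocalBusiness`) with `PostalAddress` and `GeoCoordinates`; the business name, address, phone number and coordinates are all hypothetical placeholders. The output is meant to be embedded in a `<script type="application/ld+json">` tag on the page.

```python
import json


def restaurant_jsonld(name, street, city, postal_code, country, phone,
                      cuisine, latitude, longitude):
    """Return schema.org Restaurant markup (a LocalBusiness subtype) as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Restaurant",
        "name": name,
        "servesCuisine": cuisine,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        # Coordinates describe where the business is, which matters for
        # location-based queries.
        "geo": {
            "@type": "GeoCoordinates",
            "latitude": latitude,
            "longitude": longitude,
        },
    }
    return json.dumps(data, indent=2)


# Every value below is a hypothetical placeholder.
markup = restaurant_jsonld(
    name="Example Sushi Bar", street="1 Example Street", city="Springfield",
    postal_code="00000", country="US", phone="+1-555-010-0199",
    cuisine="Japanese", latitude=40.0, longitude=-75.0,
)
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```

Generating the markup from the same data you submit to directories helps keep the two consistent.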

How to optimize your content for voice search

Optimize your site for mobile

Most of the optimizations you should make to your site to ensure it performs well in mobile search will be beneficial for your voice search rankings as well. One of the most important factors is site speed. Whether the interface is voice or a mobile device, people expect fast results.

Use AMP and structured data

Structured data is key to helping Google understand your content, so that it can provide your audience with the answers they are looking for. You can use it to help Google identify people, organizations, events, recipes, products and places.

AMP stands for Accelerated Mobile Pages. It’s an open-source project launched by Google that restricts the functionality of web pages to increase their speed dramatically. AMP is often used with structured data, as that’s what allows AMP pages to be featured in rich results on the search results page.

Additionally, for publishers, presenting your content in AMP format with structured data is one of the requirements for building an Action for Google Assistant.

There is a structured data markup called “speakable”, currently in beta, that identifies sections of an article that are suited to text-to-speech playback. Google Assistant identifies content tagged with this markup as content that can be read aloud on a Google Assistant-enabled device. The content is attributed to the source, and the URL is sent to the user’s mobile device.

This markup is only available for English-speaking users in the US, through publishers that are included in Google News.
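For publishers who qualify, the markup might look like the following sketch, which builds a schema.org `WebPage` object with a `speakable` property of type `SpeakableSpecification`. The CSS selectors `.article-headline` and `.article-summary`, the page name and the URL are hypothetical; point the selectors at the elements that hold your own spoken-friendly summary, and check Google’s current documentation, since the feature is in beta.

```python
import json


def speakable_jsonld(name, url, css_selectors):
    """Return schema.org WebPage markup with a speakable specification."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": name,
        "url": url,
        # CSS selectors for the parts of the page suited to being read aloud.
        "speakable": {
            "@type": "SpeakableSpecification",
            "cssSelector": list(css_selectors),
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical page and selectors; replace with your own.
print(speakable_jsonld(
    name="How to make sushi rice",
    url="https://www.example.com/sushi-rice",
    css_selectors=[".article-headline", ".article-summary"],
))
```

Keeping the selected sections short also lines up with the advice on concise answers.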

Answer user questions concisely

According to research from Backlinko, the typical voice search result is just 29 words long, but the average word count of a page that provides a voice search result is 2,312 words. This is not contradictory. The first figure refers to the digital assistant’s answer to a specific question or query; the second refers to the page the answer was taken from. It’s unclear whether Google favours long-form content as a mark of quality, or whether more content simply means more chances that a page is used as the answer to a query.

Either way, what these two stats combined tell us is that we need to think about the structure of our content so that we address the main ideas and key takeaways in short paragraphs that can be picked up by a voice assistant.
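One rough way to apply this is to flag paragraphs that run much longer than a voice answer. The sketch below splits a draft on blank lines and reports paragraphs over a word limit. The default of 29 words simply reuses Backlinko’s figure for a typical voice answer; it is an editorial rule of thumb, not a threshold that Google or any assistant is known to apply.

```python
import re


def long_paragraphs(text, max_words=29):
    """Return (paragraph_index, word_count) for paragraphs over max_words.

    Paragraphs are separated by blank lines; words are whitespace-separated.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [
        (i, len(p.split()))
        for i, p in enumerate(paragraphs)
        if len(p.split()) > max_words
    ]


draft = (
    "Sushi rice is short-grain rice seasoned with vinegar, sugar and salt.\n\n"
    + " ".join(["word"] * 35)
)
print(long_paragraphs(draft))
```

A flagged paragraph isn’t necessarily wrong; it is a prompt to check whether its key takeaway could be stated in a shorter opening sentence.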

Write content that is easy to read and understand

If you want your content to be used in voice interactions, it needs to be easy to read and, above all, easy for your audience to understand. Remember that users won’t be able to rely on visual cues or elements, like headlines or graphs, to understand your content.

Keep in mind, too, that most queries will be phrased in natural spoken language, as if the user were having a conversation. Take that into account when developing your content, and write conversationally when answering specific questions.

Aim for a high rank and featured snippets

This is a case of correlation, not causation. But Backlinko’s research on Google Assistant voice results found that more than 75% of voice search results came from the top three positions in the SERP, and that 40% came from a featured snippet.

This suggests that Google Assistant, and likely other digital voice assistants, favor highly authoritative results to make sure they satisfy the user’s query with their first answer.

As featured snippets are already short answers to specific questions, it makes sense for the Assistant to use those.

This means that your optimization efforts for Google search will likely also affect how your content is used by a digital voice assistant.

Analyze and answer the user intent

Identify the user intent your content addresses. Three main user intents can be served through voice search. The first is getting information: “What is this?” or “How do I do this?”. The second is navigating: “Where is this?”. The third and final intent is acting: booking a table at a restaurant, buying a pair of shoes or getting a list of all the music concerts taking place this weekend.

L’Oréal has been implementing a content strategy based on answering “How to” questions, as their research shows that’s what their users are looking for in voice search queries.
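If you want to tag a list of target queries with these three intents before planning content, a simple keyword heuristic can serve as a first pass. The cue phrases below are illustrative assumptions, not an established taxonomy, and a real project would need a larger list or a trained classifier. Action cues are checked first so that “where can I buy…” is treated as acting rather than navigating.

```python
import re

# Illustrative cue phrases for the three intents described above; checked in
# this order, so action cues win over navigation and information cues.
INTENT_CUES = [
    ("action", ("book", "buy", "order", "reserve", "get a list")),
    ("navigation", ("where is", "where's", "directions to", "near me")),
    ("information", ("what", "how", "why", "who", "when")),
]


def classify_intent(query):
    """Return "action", "navigation", "information" or "unknown"."""
    text = query.lower()
    for intent, cues in INTENT_CUES:
        for cue in cues:
            # Word boundaries stop "how" from matching inside "show".
            if re.search(r"\b" + re.escape(cue) + r"\b", text):
                return intent
    return "unknown"


for q in ["Book a table for two tonight", "Where is the nearest pharmacy",
          "How do I descale a kettle", "Where can I buy running shoes"]:
    print(q, "->", classify_intent(q))
```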

Build your trust and authority

As we saw, voice search is a winner-takes-all game. A digital voice assistant won’t display a list of results; instead, it will directly provide a single answer drawn from those search results. As it can only provide one result, it makes sense that it tends to favor results from domains with high authority, even if they are not the top result for that query.

Local SEO is key for voice search

A high volume of voice search queries is for local results. Optimizing for local SEO is not so much a matter of churning out content with local keywords as of maintaining a healthy, up-to-date presence on certain services and directories. For example, local businesses should claim their listings on Google My Business, Bing Places for Business and Apple Maps Connect. This gives them greater control over the information pulled by Google Assistant, Alexa, Cortana and Siri, which also uses data and reviews from Yelp.
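Keeping those listings consistent is easier if you periodically compare the name, address and phone number (often abbreviated NAP) each service shows. The sketch below assumes you have copied those details by hand into a dictionary; the business and its details are hypothetical, and no directory API is used. It normalizes case, whitespace and phone punctuation, then reports any field whose values still disagree.

```python
import re


def normalize_text(value):
    """Lowercase and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", value.strip().lower())


def normalize_phone(value):
    """Keep digits only, so punctuation differences are ignored."""
    return re.sub(r"\D", "", value)


NORMALIZERS = {
    "name": normalize_text,
    "address": normalize_text,
    "phone": normalize_phone,
}


def nap_mismatches(listings):
    """Return {field: {source: normalized value}} for fields that disagree."""
    mismatches = {}
    for field, normalize in NORMALIZERS.items():
        values = {source: normalize(entry[field]) for source, entry in listings.items()}
        if len(set(values.values())) > 1:
            mismatches[field] = values
    return mismatches


# Hypothetical listing details, copied by hand from each service.
listings = {
    "Google My Business": {"name": "Example Sushi Bar", "address": "1 Example Street", "phone": "(555) 010-0199"},
    "Bing Places for Business": {"name": "Example  Sushi Bar", "address": "1 Example Street", "phone": "555.010.0199"},
    "Yelp": {"name": "Example Sushi Bar", "address": "9 Old Road", "phone": "555-010-0199"},
}
print(nap_mismatches(listings))
```

Here only the address is reported, because the name and phone differ only in spacing and punctuation.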

Publishers (and local businesses too) can implement structured data to highlight local elements in their content, the way Yelp and Ticketmaster do for their reviews and events.

All the main digital voice assistants allow for the creation of voice apps to allow users to interact with your content through the assistants.

Google takes it a bit further and automatically creates Actions for your content based on the structured data on your website. When that happens, the site owner, as listed in Google Search Console, receives an email and can then claim or disable the Action.

For example, Google will create an Action for podcasts based on their RSS feed that will allow users to find and play episodes on their devices through the Assistant. How-to guides, FAQs and recipes also use structured data markup to auto-generate Actions.
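For FAQs, the markup Google reads is the schema.org `FAQPage` type, where each `Question` has an `acceptedAnswer` of type `Answer`. A minimal Python sketch that generates it is shown below; the questions and answers are hypothetical, and whether Google actually generates an Action from a given page is up to Google.

```python
import json


def faq_page_jsonld(qa_pairs):
    """Return schema.org FAQPage markup for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical FAQ content.
faq = [
    ("Do you take reservations?", "Yes, you can book a table online or by phone."),
    ("Do you offer vegetarian sushi?", "Yes, several rolls on our menu are vegetarian."),
]
print(faq_page_jsonld(faq))
```

Short, self-contained answers like these also suit the concise style voice assistants favor.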

News publishers must already be included in Google News, and use AMP and structured data in their articles, to be eligible for an auto-generated Action.

Publishers have already started partnering with Google to develop specialized Actions. In 2017, Vogue launched a feature that let users interact with the publication through Google Home to get more information about certain stories, told by the writers themselves.

Other publishers, like Bloomberg or The Washington Post, have developed Alexa Skills that allow users to listen to daily briefs of the most important news of the day.

The Daily Mail went a step further and put its entire daily edition on Alexa. While other publishers record the audio themselves, the Daily Mail uses Alexa’s automated text-to-speech capabilities. Another difference is that the Daily Mail makes this feature available only to its current subscribers.

Can you analyze the impact of voice search?

The short answer is no. Not yet, at least. Even though Google has been saying since at least 2016 that it wants to add voice search analytics to Google Search Console, as of today there’s no way to analyze voice search queries and results.

A few challenges prevent Google and other analytics providers from delivering this feature:

- The first is that natural language queries tend to be longer than keyword-based queries. And people will formulate what is essentially the same query using different words or sentence constructions. This means that the same query will have lots of low-volume variations, which makes it difficult to analyze and extract meaningful insights.

- The second challenge is that voice search queries are often chained together, as in a conversation. For example, you can ask a digital voice assistant: “Who is Stephen Curry?”. The assistant will return a summary of the NBA star’s achievements. You can then ask “How tall is he?”, and the assistant will know you’re referring to Stephen Curry. The problem gets more complicated when you realize that the assistant could answer the two queries using two different content sources.

Any voice search analytics feature needs to take both challenges into account and:

- Provide a way to cluster similar queries together, while still giving analysts the freedom to explore those variations to better understand the user’s language.

- Show conversational trees to understand how users navigate the information and which queries keep them in our content and which ones result in the query being answered with content from other sites.
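Until analytics tools offer this, you can approximate the clustering idea on any list of queries you have collected yourself. The sketch below is a deliberately naive stand-in for the semantic clustering described above: it compares queries by word overlap (Jaccard similarity) after dropping a small, hypothetical stop-word list, and greedily assigns each query to the first cluster whose seed query is similar enough. The 0.5 threshold is an assumption to tune on your own data.

```python
import re

# Hypothetical stop-word list: function words plus question words, so that
# "how tall is..." and "what is ... height" can land in the same cluster.
STOPWORDS = {
    "a", "an", "the", "is", "are", "of", "to", "in", "for", "me", "my", "i", "s",
    "do", "does", "how", "what", "where", "when", "why", "who",
}


def tokens(query):
    """Lowercased word set of a query, without stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", query.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Share of words two sets have in common (1.0 when both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_queries(queries, threshold=0.5):
    """Greedy single-pass clustering keyed on each cluster's first query."""
    clusters = []  # list of (seed_tokens, member_queries)
    for query in queries:
        words = tokens(query)
        for seed, members in clusters:
            if jaccard(words, seed) >= threshold:
                members.append(query)
                break
        else:  # no existing cluster was similar enough
            clusters.append((words, [query]))
    return [members for _, members in clusters]


queries = [
    "how tall is stephen curry",
    "what is stephen curry's height",
    "stephen curry height",
    "best sushi near me",
    "where is the best sushi near me",
]
for cluster in cluster_queries(queries):
    print(cluster)
```

Word overlap misses synonyms (“tall” versus “height” only cluster here because of the shared name), so an embedding-based similarity would be the natural next step.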

Publishers that want to understand how voice search is affecting their online presence could start by analyzing the queries that bring people to their site, looking for longer, more conversational queries, as well as queries formulated as a question.
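As a starting point for that analysis, a filter like the following keeps queries that either start with a question word or are long enough to look conversational. The input is assumed to be a plain list of query strings, for example copied from a search query report; the six-word cutoff is an arbitrary assumption.

```python
import re

# Queries that open with one of the question words discussed earlier.
QUESTION_START = re.compile(r"^\s*(how|what|where|when|why|who)\b", re.IGNORECASE)


def conversational_queries(queries, min_words=6):
    """Keep queries that start with a question word or have min_words+ words."""
    return [
        q for q in queries
        if QUESTION_START.match(q) or len(q.split()) >= min_words
    ]


report = [
    "sushi recipe",
    "how do i make sushi rice",
    "best knife for cutting fish at home",
    "sushi rice",
]
print(conversational_queries(report))
```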

You could also run tests with different digital voice assistants to check which sources they use for their answers (voice assistants often start their answers with “according to…”), and how that correlates with the SERPs on Google search.
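If you log those tests, a small script can pull the attributed source out of each transcribed answer and look it up in the SERP you recorded for the same query. In the sketch below, the answer text, the source name and the `.example` domains are hypothetical, and matching a spoken source name to a domain by removing spaces is a crude assumption that will miss many cases.

```python
import re

# Captures the source name in answers such as "According to X, ...".
ATTRIBUTION = re.compile(r"according to ([^,.:]+)", re.IGNORECASE)


def answer_source(answer_text):
    """Return the lowercased attributed source, or None if there is none."""
    match = ATTRIBUTION.search(answer_text)
    return match.group(1).strip().lower() if match else None


def serp_position(source, serp_domains):
    """1-based rank of the first recorded result whose domain contains the
    source name with spaces removed, or None if it isn't in the list."""
    if not source:
        return None
    needle = source.replace(" ", "")
    for position, domain in enumerate(serp_domains, start=1):
        if needle in domain.lower():
            return position
    return None


answer = "According to Example Recipes, sushi rice is seasoned with rice vinegar."
serp = ["sushiguide.example", "examplerecipes.example", "fishmarket.example"]
source = answer_source(answer)
print(source, serp_position(source, serp))
```

Collected over many queries, these positions give a rough, manual view of how often the spoken answer comes from the top three results.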

Voice search is already our present

The shift in consumer habits and the growing role that smart devices and digital assistants play in our everyday lives mean that voice search is not a thing of the future. It’s already a present we need to deal with.

Publishers need to take into account the interface change from text and visual references to just voice. It’s a shift that changes the way people access and consume our content. The lack of reliable analytics to understand voice search performance makes this objective difficult to achieve. But, as with any other facet of search, it all comes down to developing trust with your audience through authoritative, high-quality content.